The basic idea:

This is a really cool way to extend your flexibility in post-production. The concept is that rather than rendering RGB passes for the scene (or for each light, or whatever), you assign each light to a color channel, so every lighting render carries three individual lights packed into it. In essence, each light pass (render) includes one light in the red channel, one in the green and one in the blue. These channels are then used to reveal an ambient color pass of the scene, one light at a time. Let me show you with a simple example.

Example of RGB Light Passes (a simple version for clarity):

Let's say this is my scene. I'll do a simple three-point light setup with a key light (directional), a fill (spot) and a rim (spot). I've added a bit of simple color to the character to make things clearer. Normally, I'd set up the lights, adjust their color and render a beauty pass (diffuse +/- spec, etc.). Maybe I'd add a shadow pass, ambient occlusion, other passes, etc. But the beauty pass would certainly include all the lights. If I wanted to break out the lights for more control, I would render a separate RGBA pass for each light.

The idea here is a bit different. I'm leaving all light color info until post, so all I need to render is the luminance (or diffuse/spec MINUS color) of each light. That means I only need one channel per light. It's a pretty easy setup, actually: only two passes (plus an ambient occlusion pass, for fun).

First I'll take care of my color info. To do this I'll hide my three real lights and create an ambient light. You can do this from within the textures by turning up the ambience, but I think it's much easier to turn off the ambience in the textures (you probably never had it on) and just make an ambient light. The only catch is that the ambient light defaults to an "ambient shade" value of 0.5, which sort of defeats the purpose: ambient shade adds directional shading, and here we want flat, unshaded color.

So turn that to 0.0, make sure you've got no other lights on, and render.

You'll get a pure color pass. Doesn't say much for my textures, but this is what I wanted.

This becomes the "base" for the lighting setup.

Now I'll take each light in turn and assign its color to R, G, or B. My key light becomes pure red, the fill pure green and the rim pure blue.
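Why does giving the lights pure primaries keep them apart? A light's diffuse contribution scales linearly with the light's color, so a pure red light can only ever add to the red channel. Here's a minimal Python sketch of that, assuming a single pixel on a neutral white surface (the "MINUS color" idea above) and made-up intensities. `shade_pixel` is a hypothetical helper, not part of any renderer's API.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]

# Pure primaries assigned to the lights (key = red, fill = green, rim = blue).
KEY_COLOR: RGB = (1.0, 0.0, 0.0)
FILL_COLOR: RGB = (0.0, 1.0, 0.0)
RIM_COLOR: RGB = (0.0, 0.0, 1.0)


def shade_pixel(contributions: List[Tuple[float, RGB]]) -> RGB:
    """Sum intensity * light color over all lights for one pixel.

    `contributions` holds (intensity_at_this_pixel, light_color) pairs and
    assumes a neutral white surface, so surface color plays no part here.
    """
    r = g = b = 0.0
    for intensity, (cr, cg, cb) in contributions:
        r += intensity * cr
        g += intensity * cg
        b += intensity * cb
    return (r, g, b)


# Hypothetical intensities at one pixel: key 0.8, fill 0.3, rim 0.0.
pixel = shade_pixel([(0.8, KEY_COLOR), (0.3, FILL_COLOR), (0.0, RIM_COLOR)])
print(pixel)  # (0.8, 0.3, 0.0): each light lands in its own channel
```

Read back in post, red is the key's intensity, green the fill's and blue the rim's, which is exactly what the per-channel split relies on.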

Once each light is given a color, the render looks something like this.

It looks very colorful, but that's only because we've got a "false color" thing going on. The key light isn't really pure red; it's just in the red channel to get it to post. Same with the other lights. (BTW, if you have more than three lights, you'd just render another pass with the extra lights in the R, G and B channels, i.e. do this whole process twice or more.) Here's what the individual channels look like:

Now once we take it to post we can see why this method is actually pretty powerful.

Here I'm using Shake, just because it's easier to see stuff and it's a bit less work (and fewer layers) for comping. It could easily be done in After Effects or whatever.

These are the only two layers I'm bringing in for now (I'll bring in an AO pass later).

The trick is to separate out the channels for each light and use THAT to matte out the color pass. So the light (from each channel) either reveals the pure color or doesn't, basically building our own diffuse color pass for each light. In Shake that's done with a "reorder"; in After Effects it'd be the "Shift Channels" effect. (BTW, I'm sure there are a few other ways to do this . . .)
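Here's a minimal sketch of that trick in plain Python, outside any compositor. It assumes images are flat lists of (r, g, b) float tuples and models "matting" as a per-pixel multiply of the color pass by the extracted light channel. `extract_channel` and `matte_color_pass` are hypothetical names standing in for the reorder and mask steps.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def extract_channel(light_pass: List[RGB], channel: str) -> List[float]:
    """Pull one channel out of the packed light pass as a grayscale matte
    (the job the reorder / Shift Channels step does in this setup)."""
    idx = CHANNEL_INDEX[channel]
    return [px[idx] for px in light_pass]


def matte_color_pass(color_pass: List[RGB], matte: List[float]) -> List[RGB]:
    """Reveal the flat color pass where the light hits, scaled by its intensity.

    Assumption: the mask is applied as a straight per-pixel multiply.
    """
    return [(r * m, g * m, b * m) for (r, g, b), m in zip(color_pass, matte)]


# Two hypothetical pixels: an orange surface and a blue one.
color = [(1.0, 0.5, 0.0), (0.0, 0.0, 1.0)]
# Packed light pass: key in R, fill in G, rim in B.
light = [(1.0, 0.25, 0.0), (0.5, 0.0, 1.0)]

key_lit = matte_color_pass(color, extract_channel(light, "R"))
fill_lit = matte_color_pass(color, extract_channel(light, "G"))
rim_lit = matte_color_pass(color, extract_channel(light, "B"))
print(key_lit)  # [(1.0, 0.5, 0.0), (0.0, 0.0, 0.5)]
```

Each `*_lit` list is the color pass revealed by one light only, which is the per-light diffuse color pass described above.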

You can see I created three reorders and piped the light pass into each one. Each reorder shifts out a single channel: red for the key, green for the fill, blue for the rim. Each of those goes into a copy of the color pass as a mask (so it has the correct colors to reveal), and I end up with this node tree. (Note: below the color passes are some "switch matte" nodes, just to put the correct alpha channel back. I also added the ambient occlusion pass at the bottom of the tree.)

To clarify, here's what each color pass looks like when matted with the correct light channel.

Since we're just adding "light" to the scene, you can then simply add these passes together to get the final, fully lit image. (In After Effects I'd just use the "Add" transfer mode to lay one on top of the other; in Photoshop the equivalent blend mode is "Linear Dodge (Add)".) As I noted, I also multiplied an occlusion pass in at the end.
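The add-then-multiply step can be sketched the same way, again assuming flat lists of (r, g, b) floats and an AO pass where 1.0 means unoccluded. `add_passes` and `multiply_occlusion` are hypothetical helper names.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def add_passes(passes: List[List[RGB]]) -> List[RGB]:
    """Sum the matted light passes pixel by pixel (an "Add" blend)."""
    if not passes:
        return []
    result = list(passes[0])
    for p in passes[1:]:
        result = [(a[0] + b[0], a[1] + b[1], a[2] + b[2]) for a, b in zip(result, p)]
    return result


def multiply_occlusion(image: List[RGB], ao: List[float]) -> List[RGB]:
    """Multiply an ambient occlusion pass (1.0 = unoccluded) over the lit image."""
    return [(r * o, g * o, b * o) for (r, g, b), o in zip(image, ao)]


# One hypothetical pixel per pass: key, fill and rim already matted.
key_lit = [(0.5, 0.25, 0.0)]
fill_lit = [(0.25, 0.125, 0.0)]
rim_lit = [(0.0, 0.0, 0.0)]
lit = add_passes([key_lit, fill_lit, rim_lit])
final = multiply_occlusion(lit, [0.5])
print(lit, final)  # [(0.75, 0.375, 0.0)] [(0.375, 0.1875, 0.0)]
```

Since the passes are simply summed, stacking order doesn't matter, and multiplying AO after the sum gives the same result as multiplying it into each pass separately.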

Finally, we can get to what's cool about this . . . Here's the image as it stands:

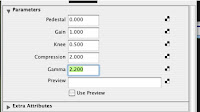

The power comes from being able to adjust each light/color pass from here on. In Shake I just stick some nodes under each pass (in this case a Mult node to color each pass and a Fade node for its transparency):

By changing the transparency and the color of each pass, you can end up with a huge array of options.

More theatrical?

More alien?

I changed all of that instantly, without rerendering anything. Notice that I've changed not only the color but also the intensity of each individual light on the fly. I could also change the gamma, etc. for each light as I see fit; any color correction, really.

This image is a bit dark and low-res, so it might not be the best example, but it shows the basic principles. There are lots of variations. You could do groups of lights per pass in a more complex scene, or render an FG pass and then add some subtle lighting effects on top of it with this method, etc. It's also a great idea to render shadow passes this way, by making the shadows from each light R, G, or B and separating them in post. I wouldn't do this for everything, but for things with a more stylized look, I think the flexibility is invaluable. As I said, Josh and Eric rendered all of Fusion Fall this way.
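The per-light grading described above (color, intensity, gamma) could be sketched like this, under the same flat-list assumptions. `grade_pass` is hypothetical: it applies a gamma, then a tint (like the Mult node), then scales by an opacity. That last step assumes that fading a pass which later gets added is equivalent to scaling its RGB. The gamma convention (value ** (1 / gamma), so gamma above 1 brightens) is also an assumption; check what your compositor does.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def grade_pass(image: List[RGB], tint: RGB = (1.0, 1.0, 1.0),
               opacity: float = 1.0, gamma: float = 1.0) -> List[RGB]:
    """Re-grade one matted light pass: gamma, then tint (Mult), then fade.

    Assumptions: gamma is applied as v ** (1 / gamma), so gamma > 1 brightens;
    fading a pass that will be added is modelled as scaling its RGB by opacity.
    """
    if gamma <= 0.0:
        raise ValueError("gamma must be positive")
    graded = []
    for px in image:
        channels = []
        for value, t in zip(px, tint):
            # Values at or below zero are clamped to 0 before the gamma.
            v = value ** (1.0 / gamma) if value > 0.0 else 0.0
            channels.append(v * t * opacity)
        graded.append((channels[0], channels[1], channels[2]))
    return graded


# Hypothetical: warm up a gray key pass and fade it to half strength.
key_pass = [(0.5, 0.5, 0.5)]
print(grade_pass(key_pass, tint=(1.0, 0.5, 0.25), opacity=0.5))
# [(0.25, 0.125, 0.0625)]
```

Because every light lives in its own pass, each one gets its own `grade_pass` call, and nothing upstream has to be rerendered.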

Another bonus is the disk space it saves if you want this much control. Instead of an RGBA pass for each light, you get three passes in one. I love the idea of reusing the channels for things other than color. I'll do something later about RGB combo passes and ID mattes, etc. But that's enough tech stuff for now.
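To put rough numbers on the disk-space point, here's a sketch that counts images rather than bytes. It assumes every image has the same resolution and bit depth and ignores AO and any other extra passes. It compares one pass per light against ceil(n / 3) packed light passes plus the one color pass. `pass_counts` is a hypothetical helper.

```python
import math
from typing import Tuple


def pass_counts(num_lights: int) -> Tuple[int, int]:
    """Images needed for `num_lights` lights: (separate, packed).

    separate: one RGBA pass per light (color baked in).
    packed:   ceil(n / 3) light passes with one light per R/G/B channel,
              plus one flat color pass.
    """
    separate = num_lights
    packed = math.ceil(num_lights / 3) + 1
    return separate, packed


for n in (3, 6, 9):
    print(n, pass_counts(n))
# 3 (3, 2)
# 6 (6, 3)
# 9 (9, 4)
```

With one or two lights the packed approach doesn't save anything (the color pass is overhead), but from three lights on it pulls ahead, and the gap widens as the rig grows.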