So here's another one I worked on for 5-6 hours yesterday and today. The sound is a bit low on this one for much of it (I may have to treat it to make it more clear) and it is, once again, about as long as I would want to go, but it's progressing. As always, this is audio of an actual conversation . . .

As I said previously, I'm not trying to make these any good from an animation POV, just sort of fun little things to watch. But one thing I'm finding interesting is that these require attention to a sort of secondary animation principle that I usually don't worry about too much. Because these are so static and there is so much reliance on the eyes and mouths, I find that it's really important to make sure that I'm at least doing a little to direct the viewer's eye to where it needs to be. I have to be really careful not to put extraneous motion away from the center of action. Subtle things seem to work OK to give some texture, but big movements will pull the eye all the way across the scene and make it harder to watch. Obviously, this isn't animation rocket science, but I thought it was interesting that certain things become more clear when something is as simple as this. It's also a little more clear in the larger version (see link below) . . .

view the larger QT - here

Friday, September 19, 2008

Tuesday, September 16, 2008

Animation - The Needful Head Website/Trailer

Just for anyone that I haven't told yet, I made a site for "The Needful Head". It's not quite done (slouching my way to the finish line . . . ) but it Exists, and that's the important part.

I can't put the full film up there (it's still making the festival rounds: it just played in Taipei, plays in Germany in a couple of weeks, and is scheduled to go onto iTunes soon), but there are pics and stuff there and some drawings from the book that Rich wrote and illustrated that aren't in the movie. The music is a bit annoying (Halli's music is wonderful, but unstoppable on the site at this point). I also slapped a trailer together, which is on the site. The link to the site is on the right side, over there =====>>>

or www.TheNeedfulHead.com

Here's the trailer.

Animation - noise and BofA animation WIP

I just found this "laying" around my hard drive. Kind of apropos, given the current banking crisis. . . It's from a job at a studio, which I won't name, that never amounted to much (this job, not the studio). I don't think it was ever finished (parts are even still in blocking). I had about a week or so to rig the character, plan, block and animate this little spot (obviously it's not a real render and it's minus any 2D text, logos, etc). It was a proof of concept thingy. The people were nice, but the job wasn't much fun. I'm pretty sure nothing came of it (I spent some time later at Framestore and they did most of the B of A spots I've seen on TV, though I didn't work on them). I'm starting to gather my stuff from the last year to put on a new reel and came across this. There are some problems (the foot slipping at the beginning sucks), but there are bits that were OK, at least in theory.

The reason I remember this so well is that while I was working on this, there was no soundtrack for the spot yet. The studio I was working at played REALLY loud music. I never listen to music while I work, especially while I animate, so that was distracting, but the kicker was they wanted a really specific look to the drumming (don't know if I ever got it, or even if this is the last pass I did). So I was trying to work out the animation to a specific drum beat that didn't exist while listening to BLASTING techno! I nearly had a nervous breakdown. Live and learn. Now I go to studios with headphones, put them on and just listen to nothing. . . .

click here to see a slightly larger QT version - BofA animation QT

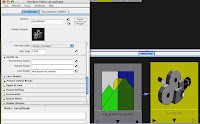

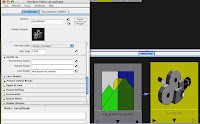

Technical - Figuring out Linear Workflow in Maya

Another techy thing. Just learning about this stuff and wanted to throw some stuff up about it. This is specifically Maya related (more specifically Mental Ray).

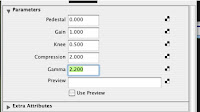

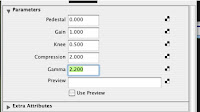

This is called Linear Workflow . . . I'll give you the most simple version (and hope that I don't get that too wrong).

Basically, the gist is this. When you're working in Maya (or most other 3D apps), the math that makes the images works in something called "linear" space. Okay . . . The problem comes from the fact that the images you're used to looking at generally come "corrected" or "adjusted", NOT in "linear" space. This is to compensate for the way computer monitors and TVs treat light vs. the way your eye sees light. You've probably seen this idea either in calibrating your monitor or in a Photoshop image that's missing some info. The common space for monitor correction is called sRGB (again, you may have seen this term somewhere on your computer). Digital cameras, web photos, etc. are usually all gamma corrected to sRGB (a gamma of roughly 2.2), so they look correct to your eye. This gamma is basically the relationship of lights to darks (it's the center point slider in the Levels control of Photoshop). Correcting gamma to sRGB looks like it makes the image lighter, but it's actually more complex. It's really changing the gradient from dark to light. The blacks are still black and the whites are still white, but the stuff in the middle shifts brighter (sRGB or gamma 2.2) or darker (linear or gamma 1.0) to the eye. This comes into play mostly when you're trying to render realistic images in a 3D program. Things don't work quite right.
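If numbers help, here's a tiny Python sketch of that "blacks and whites stay put, midtones move" idea. It assumes sRGB can be treated as a plain 2.2 power curve (the real sRGB curve is slightly different near black), and the function names `encode` and `decode` are just mine for illustration, not anything from Maya.

```python
# Sketch of a pure power-law gamma curve on pixel values normalised to 0..1.
# Assumption: sRGB is approximated by a plain 2.2 power curve.

SRGB_GAMMA = 2.2  # the approximate value used throughout this post


def encode(linear_value, gamma=SRGB_GAMMA):
    """Linear -> gamma corrected (what looks right on a monitor)."""
    return linear_value ** (1.0 / gamma)


def decode(encoded_value, gamma=SRGB_GAMMA):
    """Gamma corrected -> linear (what the renderer does its math in)."""
    return encoded_value ** gamma


# Black (0.0) and white (1.0) do not move; only the values in between shift.
for v in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"linear {v:.2f} -> encoded {encode(v):.3f}")
```

Run it and the end points come back unchanged while the values in between come out higher, which is the "lighter, but really just a different gradient" effect described above.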

So here's the basic, simplified deal (I'll show an example in one second).

- the program works and renders linear images (hence "linear workflow")

- to look correct, images must be gamma corrected

- therefore, you should be gamma correcting your output images when you render in 3d

I set up a scene with a few objects, each with an mia material and create an HDR environment. Here's what I get straight out of the box:

(click images for larger)

This is supposed to be correct, but when compared with what I think it should look like (based on the HDRI file), it's DARK.

Typically, one would just crank up the influence of the env HDRI or add some lights. But you shouldn't have to . . . that's the problem. Furthermore, the image is actually only too dark in the darker parts. That's because this image is LINEAR, and thus looks funny on your monitor. There is only one element of this image that actually matches what one would expect. That's the wooden floor. That's because this TIF file has already been gamma encoded (I know because it looks right in Preview; as I said, most normal images are already gamma encoded). So Maya takes what you give it and computes and renders out in linear (dark) space.

To properly light and render this image, there are two steps (at its most basic level):

- I'll de-gamma my textures. This will make EVERYTHING in plain old linear space up until we hit the camera.

- Then I'll add a gamma correction to the camera lens. Everything that then renders will be properly gamma corrected.

Here's how I fixed the image above.

The first step is to "de-gamma" my texture file (the wood). Here's how. You should stick a Gamma Correct node in between the texture file and the material. Then connect the outColor of the image into the value of the Gamma Correct. Then change the "gamma" value of the correct node to 0.455 in all channels. This is to "de-gamma" the image (0.455 is the inverse of the sRGB gamma, i.e. 1/2.2). Don't worry too much. 2.2 and 0.455 are really the only two numbers you need to know.
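For anyone who would rather script that hookup than click through it, here's a rough sketch of the same wiring in Python. It's a sketch under assumptions, not a tested production tool: the function name `insert_degamma` and the node names `file1` and `mia_material1` are made up for illustration, and I'm assuming the Gamma Correct node's attributes are `value`, `gammaX`/`gammaY`/`gammaZ` and `outValue`. The `cmds` argument is meant to be Maya's `maya.cmds` module, passed in so the function can be read outside of Maya.

```python
def insert_degamma(cmds, file_node, material_attr, gamma=0.455):
    """Wire file_node.outColor -> gammaCorrect.value -> material_attr.

    `cmds` is expected to be the `maya.cmds` module. The default gamma of
    0.455 (roughly 1/2.2) is the "de-gamma" value from the steps above.
    """
    # Create the Gamma Correct utility node.
    gamma_node = cmds.shadingNode("gammaCorrect", asUtility=True)

    # Set the same gamma on all three channels.
    for axis in "XYZ":
        cmds.setAttr(gamma_node + ".gamma" + axis, gamma)

    # Texture -> Gamma Correct -> material slot.
    cmds.connectAttr(file_node + ".outColor", gamma_node + ".value", force=True)
    cmds.connectAttr(gamma_node + ".outValue", material_attr, force=True)
    return gamma_node


# Inside Maya this would be called something like (hypothetical node names):
#   import maya.cmds as cmds
#   insert_degamma(cmds, "file1", "mia_material1.diffuse")
```

Repeating the step for every texture then becomes a loop over your file nodes instead of a lot of dragging in the Hypershade.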

Repeat this step on every texture file you have. (there are other approaches, but this is the simplest.)

This is the image that you get now:

You'll notice that the only real difference is that the wood is actually darker. That was exactly the point. We want everything on the same page, the same working color space, in this case, linear. The image was looking dark and now the wood is equally as dark, because we removed the gamma correction that was built into the texture. Now we can add correction back (to sRGB) and the wood texture won't be brighter than the rest of the image. Got it?

Now let's add that correction back, this time onto the camera itself. Select the render cam and open its attribute editor. Twirl down the "mental ray" tab and we're looking for the "lens shader" slot.

Click on the checker box of the "lens shader" slot and add (from the MR list) an mia_exposure_simple node (you could also use the photographic version of the exposure, but simple works fine for now). Open its attributes and change the gamma to 2.2. (Some people seem to like the look of 1.8 better, but that's a separate issue.) This is correcting to sRGB (the way you normally see images).

So anything this camera renders will get a gamma of 2.2 added (which is why we had to remove the gamma from the texture file, otherwise we'd double it up). Since everything in our scene has been working in linear space, the image should look much more like one would expect a photograph of our scene to look.
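Here's the "double it up" problem as plain arithmetic, in a quick Python sketch. It's heavily simplified: it pretends the material passes the texture value straight through with no lighting, treats sRGB as a plain 2.2 power curve, and the function names are mine, not Maya's.

```python
GAMMA = 2.2


def texture_to_linear(encoded):
    """What the Gamma Correct node at 0.455 does to the texture."""
    return encoded ** GAMMA


def lens_gamma(linear):
    """What the lens shader with gamma 2.2 does to the rendered pixel."""
    return linear ** (1.0 / GAMMA)


painted = 0.5  # a mid-grey pixel as it looks in the already encoded TIF

doubled = lens_gamma(painted)                         # texture left as-is
round_trip = lens_gamma(texture_to_linear(painted))   # texture de-gamma'd first

print(f"without de-gamma: {doubled:.3f}")
print(f"with de-gamma:    {round_trip:.3f}")
```

Without the de-gamma step the grey comes out brighter than it was painted; with it, the value comes back to where it started, which is why the wood only lines up with the rest of the image once the texture has been taken back to linear.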

Here's the image with gamma correction.

The image is much "brighter" and softer. The brightness is actually all in the midtones. The white parts and the black parts are all the same, just the gamma has changed. Adding the sRGB gamma makes the physical properties of the image behave much more like a photograph to our eyes, which was the point. The wood is now in line with the rest of the image, as well, unlike our first pass. And I never touched a light or any other setting save the gammas!

So in short, there are two things to do for the basics:

1. remove gamma corrections from your texture files

2. add gamma correction to your camera for the renders.

whew.

NOTE: These images were rendered in preview quality and took about 5 seconds each. There was no retouching or anything at all.

One could easily work in this mode then remove the gamma (switch the gamma back to 1.0) and render to float images for later gamma correcting in a comping program, or use different workflows entirely, but this was the easiest way for me to understand this.

NEW NOTE (thanks andrew, from 3dlight): You can also adjust the gamma at the framebuffer in mental ray render globals, but this works inversely (to add gamma of 2.2 (make the image lighter), you would have to change the setting to 0.455). In that case, you would not need to add all of the gamma correct nodes to your textures, it would correct those all for you, but you would need to turn your lens shader gamma back to 1.0. There is some difference in terms of what happens depending on whether your textures and/or output are float or LDR, but I'll leave that be for now. You can certainly get better info on that from the links below.

Some good posts for more about this stuff are:

http://3dlight.blogspot.com/2008/09/linear-workflow-for-maya-mental-ray.html

http://www.djx.com.au/blog/2008/09/13/linear-workflow-and-gamma/

http://www.floze.org/2008/07/six-tuts-on-light-and-shade-part-i.html

Monday, September 15, 2008

Technical - Making Ilksville

Yes, there will be technical stuff here. I'll try to give fair warning (like the word "technical" in the heading). But since I enjoy knowing how other people do stuff, I figured I may as well show how I do stuff.

Here I'm gonna walk through the basics of how we put Ilksville together. Here's where we started . . .

Audio is recorded on a little device (a Zoom H2, it's awesome. It records in surround sound if you want! It's cheap and small and works like a charm). Just stuff with our friends for now, but we'll branch out and record other people and things as we go. We take that "wild" sound and listen to it and clip out a few interesting little snippets. I run those through Soundtrack Pro and add 2 semitones of upward pitch correction to each. I take these snippets and we figure out which to use and then pick a character for each person (if they don't already have one) from the many pages of characters Rich has done and start to set them up. (BTW, I think this is basically how South Park is animated . . .)
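For the curious, here's roughly what "2 semitones up" means in numbers. This is just the standard equal-temperament arithmetic, not anything specific to Soundtrack Pro, and the 200 Hz voice is a made-up example value.

```python
def semitone_ratio(semitones):
    """Frequency ratio for a pitch shift, assuming equal temperament."""
    return 2.0 ** (semitones / 12.0)


ratio = semitone_ratio(2)
print(f"frequency ratio for +2 semitones: {ratio:.4f}")

# Hypothetical example: a voice sitting around 200 Hz.
print(f"a 200 Hz tone would land near {200 * ratio:.1f} Hz")
```

So the voices end up a bit over 12% higher in frequency, enough to sound slightly cartoony without turning into chipmunks.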

This is one of the dozen or so pages of chars Rich started with. The character for Rich is third from the left on the bottom row.

(click pics for larger)

Then Rich redrew the character so I could put it back together as individual pieces.

I used the original template to piece this back together in separate layers in Photoshop. Then I rendered each piece out in its own TIF file.

The next step is to go into Maya (3D program) and create a flat plane for each body part. I map the textures onto the planes. Here's a quick example.

Each plane is positioned and the pivots, etc. are adjusted so the parts move as they should when rotated. Basically, everything will rotate in only one plane. If we wanted to create a new arm shape we would draw a new arm and map that onto the flat plane. We're trying to stay away from that for now. Just keep it super simple.
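The pivot business is just 2D rotation around a point. Here's a small Python sketch of the math, with made-up coordinates for a shoulder pivot and a hand; Maya does all of this for you once the pivot is placed, so this is only to show why the pivot position matters.

```python
import math


def rotate_about_pivot(point, pivot, degrees):
    """Rotate a 2D point counterclockwise around a pivot, in one plane."""
    angle = math.radians(degrees)
    dx = point[0] - pivot[0]
    dy = point[1] - pivot[1]
    x = pivot[0] + dx * math.cos(angle) - dy * math.sin(angle)
    y = pivot[1] + dx * math.sin(angle) + dy * math.cos(angle)
    return (x, y)


# Hypothetical arm: pivot at the shoulder, hand hanging straight down.
shoulder = (2.0, 5.0)
hand = (2.0, 2.0)

# Swinging the arm 90 degrees moves the hand out to the side of the shoulder.
print(rotate_about_pivot(hand, shoulder, 90))
```

If the pivot were left at the center of the arm plane instead of at the shoulder, the same rotation would spin the arm around its middle like a propeller, which is why each pivot gets adjusted by hand.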

Then I add in controls for each body part and link them as I would any character (parenting, point, orient and parent constraints). I try to lock off anything that won't be used so I don't confuse myself when animating. The only parts that are slightly different are the mouth and eyes. That's because these have so many shapes.

For instance you can see here that the mouth has some new controls. I set up some enum controls on the mouth controller with each phoneme. This type of control works well because each shape will completely cancel the previous shape.

I tried to use the bare minimum of shapes, as you can see. I then used set driven keys to drive the visibilities of the various mouth shapes (easier to me than switching textures).
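The logic behind the enum plus set driven keys boils down to "exactly one shape visible at a time". Here's a minimal Python sketch of that idea outside of Maya; the shape names in `MOUTH_SHAPES` are hypothetical stand-ins, not the actual list on the rig.

```python
# Hypothetical phoneme list; the real rig's shapes may differ.
MOUTH_SHAPES = ["closed", "AH", "EE", "OH", "MBP", "FV"]


def mouth_visibility(enum_index, shapes=MOUTH_SHAPES):
    """Return a visibility flag per mouth plane for one enum value.

    Only the shape whose position matches the enum index is visible,
    so picking a new value always cancels the previous shape.
    """
    return {name: (i == enum_index) for i, name in enumerate(shapes)}


vis = mouth_visibility(MOUTH_SHAPES.index("OH"))
visible = [name for name, is_on in vis.items() if is_on]
print(visible)
```

In the rig, the set driven keys play the role of this function: the enum attribute is the driver and each mouth plane's visibility is a driven value.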

The eyes were similar.

Each mouth shape and eye shape was drawn separately in Photoshop then imported and mapped onto a plane. Again there's probably an easier way to do this, but I couldn't be bothered . . .

BTW, I copy each rig from a previous character and reshape it for the next one. Then I reproject the UVs and change the texture maps, so I don't start from scratch on each one. . .

In terms of the backgrounds we're just using anything that seems to work. In this case I took a photo at the Museum of Natural History and tweaked it out. Sorry kid. Whoever you are.

The first pic is the orig. The second pic is the corrected one.

I use these in Maya to lay out the scene, but don't render them. I only render the chars and props then comp it all in After Effects, adding shadows, etc.

So here are the characters in the set. Everything is done from the front view, so there is no perspective at all. Everything is orthogonally flat.

Since the set won't be rendered with the chars, I don't need to complete all the doodads before I render. I can go back and add in signs or whatever later.

Then I just start moving things around. I do the mouths first. Then just do runs on the body. I'll do the torso, then the arms, etc. Sometimes I'll animate straight ahead for a little while too. This one ("purses") was really long. It took about one day. "swayze" only took about 2 hours. I'm trying to animate as LITTLE as possible and still have it be watchable. I'll have to adjust the level of animation once I do a few more and figure out what works best. Trying not to be fussy at all. Bush-league, I know, but time is precious and stress sucks. The red hashes at the bottom are all hand animated points, so you get an idea of how many things need to be touched even for this level of animation.

The timeline covers about 1 minute. Even keeping it simple, it's a lot of animation to do in a day.

That's about it. As I said, I render it out and comp it together with the final pic in After Effects and mix the sound back in. Eventually I'll also be adding a quick title and end card to each one as well.

Ilksville - "Purses" testing

Here's the second one. This one is rougher and much longer. The render here is straight out of Maya, so there's less integration with the chars and the props, etc. But you get the idea . . .

This is about as long as we'd be willing to go on any one clip. The point is to be fun and quick, not to belabor the process or the story. This one is of our friends Ana and Melissa. Again just a randomly captured snippet of dialogue. We've decided that for the time being, all props and environments will be photos, not drawings, though as I said, they're not quite properly integrated yet in this clip.

At some point we'll work out intros to the clips and package them up a bit. Just starting to talk about that now. Obviously, the whole idea of the thing is evolving a bit as we go. . .

View the larger QT - here

Ilksville - "Swayze" testing

Here's a small sample of what we're on about. This is the first test I did.

The basic idea is that these videos are just little excerpts from the lives of the residents of Ilksville. This one has me, Rich and our friend Melissa. The sound probably isn't quite loud enough, but this is just the first test. And the whole point is that this is "wild" sound. Just caught while the recorder was running. The animation is also crap, but the whole point is that we should be able to do an entire clip in about a day. I'm rigging the characters in Maya (a 3D program), which I'll explain in another post someday, but aside from that, we're trying to be Breezy about this whole thing and just accumulate interesting tidbits whenever we have the time.

View the large QT version - here

Ilksville - the beginning

Last year at a film festival with "The Needful Head", my main collaborator, Richard Bailey, showed me about a dozen pages of drawings he had recently done. They were just rows and rows of funny little people. He was calling them "Ilksville", but had no idea what to do with them. I loved the drawings and after months of fits and starts we've decided on this concept:

- 1. Ilksville will be about the town/place called Ilksville (duh), the residents of which are the little people in the drawings.

- 2. the whole point is to be quick and loose with the project. Never to worry overmuch about what we're doing. We'll do that with other projects. This should be fun and "easy".

- 3. Rather than worry too much about a plot, we will just periodically record audio of us and our friends while we're hanging out, etc. We'll then take small snippets of that audio and quickly animate Richard's drawings to that clip.

- 4. Eventually the clips will be collected into a website or some other repository where people can browse through them. We'll wait a bit to see when this will happen.

- 5. The characters will be consistent throughout, so viewers can get to know them, as they would a real person. No acting or cherrypicking dialogue to create false impressions.

Getting Started

Good lord. Another blog foisted on the world. . . So sue me.

Just seemed like a good place to keep a bunch of work in progress and 3D/animation tidbits for those who were interested, rather than hiding things deep in my own site.
